K8S Enterprise Container Cloud 07 - Production Environment Build Plan

This document describes how to build a production Kubernetes cluster, including Calico, Helm, StorageClass (Rook-Ceph), Ingress (Nginx), and Cert-manager (CA & ACME).

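Environment preparation

The cluster consists of 3 Master nodes and 2 Worker nodes.

In addition, Master2 and Master3 also host the Master.api virtual address, serve as the Ingress exit point, and run Worker workloads.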

Keepalived

Master2 and Master3 carry the virtual IP address (VIP: 10.135.204.48)

Run on wx-cloudnative-master-2 and wx-cloudnative-master-3:

# yum install keepalived -y

# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id CloudNative

}

vrrp_instance VI_1 {

state BACKUP

interface bond0

virtual_router_id 51

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.135.204.48

}

}

# systemctl restart keepalived
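Both nodes above are configured with state BACKUP and the same priority (50), so the configuration alone does not say which node should hold the VIP. The following is a minimal sketch of the VRRP tie-break rule under a simplifying assumption: both nodes claim MASTER at the same moment, in which case the higher priority wins and, on equal priority, the higher primary IP address wins. The function name `elect_master` and the tuple layout are hypothetical and not part of keepalived; the IP addresses are taken from the /etc/hosts entries in this document.

```python
import ipaddress


def elect_master(nodes):
    """Return the name of the node that wins a simultaneous VRRP election.

    nodes: iterable of (name, priority, primary_ip) tuples.
    Simplified model: highest priority wins; ties go to the higher IP.
    """
    return max(
        nodes,
        key=lambda n: (n[1], int(ipaddress.ip_address(n[2]))),
    )[0]


# Values from this document: both nodes use priority 50.
candidates = [
    ("wx-cloudnative-master-2", 50, "10.135.204.44"),
    ("wx-cloudnative-master-3", 50, "10.135.204.45"),
]
winner = elect_master(candidates)
print(winner)
```

Giving the two nodes different priority values would make the preferred VIP holder explicit instead of relying on the address tie-break.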

Base system configuration on every node

Name resolution

# vim /etc/hosts

10.135.204.2 wx-cloudnative-master-1

10.135.204.44 wx-cloudnative-master-2

10.135.204.45 wx-cloudnative-master-3

10.135.204.46 wx-cloudnative-worker-1

10.135.204.47 wx-cloudnative-worker-2

10.135.204.48 wx-cloudnative-master.api

Security

# systemctl stop firewalld && systemctl disable firewalld

# yum install vim wget ntp -y

# vim /etc/selinux/config

SELINUX=disabled

SELINUXTYPE=targeted

# setenforce 0

# swapoff -a

Switch to the Aliyun mirrors

# mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo_bak

# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

# yum clean all && yum makecache

# yum update -y

# timedatectl set-timezone Asia/Shanghai

# timedatectl

# timedatectl set-ntp true

Docker

Install Docker and add domestic (China) registry mirrors

# yum install yum-utils device-mapper-persistent-data lvm2 -y

# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# yum install containerd.io docker-ce docker-ce-cli -y

# systemctl enable docker && systemctl start docker

# cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"registry-mirrors":[

"https://32lrw8s6.mirror.aliyuncs.com",

"http://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn",

"https://registry.docker-cn.com"

]

}

EOF
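Docker refuses to start when daemon.json is not valid JSON, and the kubelet and Docker should agree on the cgroup driver. As a hedged sketch, the snippet below parses the same configuration text and extracts the cgroup driver before the service is restarted. The helper name `cgroup_driver` is hypothetical, and the configuration is embedded as a string here only so that the example is self-contained; on a real node the text would be read from /etc/docker/daemon.json.

```python
import json

# Same content as the daemon.json written above, embedded for a self-contained check.
DAEMON_JSON = """
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {"max-size": "100m"},
  "storage-driver": "overlay2",
  "registry-mirrors": [
    "https://32lrw8s6.mirror.aliyuncs.com",
    "http://hub-mirror.c.163.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://registry.docker-cn.com"
  ]
}
"""


def cgroup_driver(config_text):
    """Parse daemon.json text and return the cgroup driver from exec-opts, or None."""
    config = json.loads(config_text)  # raises ValueError on a syntax error
    for opt in config.get("exec-opts", []):
        key, _, value = opt.partition("=")
        if key == "native.cgroupdriver":
            return value
    return None


driver = cgroup_driver(DAEMON_JSON)
print(driver)
```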

# mkdir -p /etc/systemd/system/docker.service.d

# systemctl daemon-reload

# systemctl restart docker

# docker info

kubeadm kubectl kubelet

Installation

# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

# sysctl --system

# yum install kubeadm-1.19.2 kubelet-1.19.2 kubectl-1.19.2

# systemctl start kubelet

# systemctl enable kubelet

Operations on Master1

kubeadm

Create the cluster

Options used below:

--control-plane-endpoint: a stable IP address or DNS name for the control plane

--pod-network-cidr: the IP address range for Pods

--service-cidr: the virtual IP address range for Services

--service-dns-domain: the DNS domain for Services (cloudnative.wx)

# kubeadm init \
--apiserver-advertise-address 0.0.0.0 \
--apiserver-bind-port 6443 \
--cert-dir /etc/kubernetes/pki \
--control-plane-endpoint wx-cloudnative-master.api \
--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
--kubernetes-version 1.19.2 \
--pod-network-cidr 10.10.0.0/16 \
--service-cidr 10.20.0.0/16 \
--service-dns-domain cloudnative.wx \
--upload-certs
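The Pod CIDR, the Service CIDR, and the node network must not overlap. The sketch below checks the values used in this document with Python's `ipaddress` module, and also derives two addresses that kubeadm conventionally assigns from the Service range (the first address for the `kubernetes` Service, the tenth for cluster DNS). The helper names `find_conflicts` and `service_fqdn` are hypothetical and exist only for this illustration.

```python
import ipaddress

# Values taken from the kubeadm init command and /etc/hosts in this document.
POD_CIDR = ipaddress.ip_network("10.10.0.0/16")
SERVICE_CIDR = ipaddress.ip_network("10.20.0.0/16")
NODE_IPS = [
    "10.135.204.2", "10.135.204.44", "10.135.204.45",
    "10.135.204.46", "10.135.204.47", "10.135.204.48",
]
DNS_DOMAIN = "cloudnative.wx"


def find_conflicts(pod_cidr, service_cidr, node_ips):
    """Return a list of human-readable overlap problems (empty if none)."""
    problems = []
    if pod_cidr.overlaps(service_cidr):
        problems.append("pod CIDR overlaps service CIDR")
    for ip in node_ips:
        addr = ipaddress.ip_address(ip)
        if addr in pod_cidr or addr in service_cidr:
            problems.append(f"node address {ip} falls inside a cluster CIDR")
    return problems


def service_fqdn(service, namespace, domain=DNS_DOMAIN):
    """Build the in-cluster DNS name of a Service for the given domain."""
    return f"{service}.{namespace}.svc.{domain}"


conflicts = find_conflicts(POD_CIDR, SERVICE_CIDR, NODE_IPS)
api_service_ip = SERVICE_CIDR[1]    # first usable address in the Service range
dns_service_ip = SERVICE_CIDR[10]   # tenth address, used for cluster DNS
print(conflicts, api_service_ip, dns_service_ip)
print(service_fqdn("kube-dns", "kube-system"))
```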

Check the cluster

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl cluster-info

Kubernetes master is running at https://wx-cloudnative-master.api:6443

KubeDNS is running at https://wx-cloudnative-master.api:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Calico

Fetch the YAML manifest

# mkdir calico && cd calico

# wget https://docs.projectcalico.org/manifests/calico.yaml

The current Calico manifest automatically detects the Pod CIDR from the existing cluster configuration

# kubectl apply -f calico.yaml

Check the running status; coredns has also returned to the Running state

# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-578894d4cd-sfwmx 1/1 Running 0 97s

kube-system calico-node-cwmkr 1/1 Running 0 98s

kube-system coredns-546565776c-bq22h 1/1 Running 0 4h54m

kube-system etcd-wx-cloudnative-master-1 1/1 Running 0 4h55m

kube-system kube-apiserver-wx-cloudnative-master-1 1/1 Running 0 4h55m

kube-system kube-controller-manager-ck01 1/1 Running 0 4h55m

kube-system kube-proxy-ksc6b 1/1 Running 0 4h54m

kube-system kube-scheduler-wx-cloudnative-master-1 1/1 Running 0 4h55m

Helm

Installation

# wget https://get.helm.sh/helm-v3.3.3-linux-amd64.tar.gz

# tar -zxvf helm-v3.3.3-linux-amd64.tar.gz

# mv linux-amd64/helm /usr/local/bin/helm

# helm version

version.BuildInfo{Version:"v3.3.3",

GitCommit:"55e3ca022e40fe200fbc855938995f40b2a68ce0",

GitTreeState:"clean", GoVersion:"go1.14.9"}

Add the Aliyun chart repository

# helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

# helm search repo stable

# helm repo update

Note: Helm 3 and later no longer require Tiller

Master2 & Master3

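Join the cluster as Master (control-plane) nodes. The base join command has the following form; joining as a control-plane node additionally requires --control-plane and --certificate-key, as in the full command further below.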

kubeadm join wx-cloudnative-master.api:6443 --token nr3hgx.29i5i0vfobqzdde2 \

--discovery-token-ca-cert-hash sha256:9b908cded64d2193c89ab9a3eb54867a93fab59aa0acb56e48e1bac9d985c151

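Supplement: if the token has been forgotten, a new one can be generated, and re-uploading the certificates prints a fresh certificate key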

# kubeadm token create --print-join-command

# kubeadm init phase upload-certs --upload-certs

...

Using certificate key:

9657d13637814316fe8284e0ef167106b13acdad30279dbc29a804f82cedb973

# kubeadm join wx-cloudnative-master.api:6443 \

--token nr3hgx.29i5i0vfobqzdde2 \

--discovery-token-ca-cert-hash sha256:9b908cded64d2193c89ab9a3eb54867a93fab59aa0acb56e48e1bac9d985c151 \

--control-plane --certificate-key 9657d13637814316fe8284e0ef167106b13acdad30279dbc29a804f82cedb973

Remove the NoSchedule taint from these two Master nodes so that they also act as Workers

# kubectl taint nodes wx-cloudnative-master-2 node-role.kubernetes.io/master:NoSchedule-

# kubectl taint nodes wx-cloudnative-master-3 node-role.kubernetes.io/master:NoSchedule-

Worker1 & Worker2

Join the cluster as Workers

# kubeadm join wx-cloudnative-master.api:6443 \

--token nr3hgx.29i5i0vfobqzdde2 \

--discovery-token-ca-cert-hash sha256:9b908cded64d2193c89ab9a3eb54867a93fab59aa0acb56e48e1bac9d985c151

Cluster status

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

wx-cloudnative-master-1 Ready master 70d v1.19.2

wx-cloudnative-master-2 Ready master 70d v1.19.2

wx-cloudnative-master-3 Ready master 70d v1.19.2

wx-cloudnative-worker-1 Ready <none> 70d v1.19.2

wx-cloudnative-worker-2 Ready <none> 70d v1.19.2
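With three Master nodes, and assuming kubeadm's default stacked topology in which each control-plane node runs an etcd member, the control plane stays writable as long as a majority of members is available. The sketch below shows that arithmetic; the function names are hypothetical.

```python
def quorum(members):
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def tolerated_failures(members):
    """Number of members that can fail while a majority remains."""
    return members - quorum(members)


for n in (1, 2, 3, 5):
    print(n, quorum(n), tolerated_failures(n))
```

For the three Masters in this cluster the quorum is 2, so one Master can be lost; a two-member setup would tolerate no failure at all, which is why an odd member count is the usual choice.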

Ingress(Nginx)

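Node setup

The NodePorts of Master2 and Master3 serve as the exit point for all Ingress resources.

These two nodes are therefore dedicated to hosting the Ingress Pods and are bound with a custom label (ingresscontroller=member).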

# kubectl label nodes wx-cloudnative-master-2 ingresscontroller=member

# kubectl label nodes wx-cloudnative-master-3 ingresscontroller=member

# kubectl get nodes --show-labels | grep master

wx-cloudnative-master-1 Ready master 70d v1.19.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=wx-cloudnative-master-1,kubernetes.io/os=linux,node-role.kubernetes.io/master=

wx-cloudnative-master-2 Ready master 70d v1.19.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingresscontroller=member,kubernetes.io/arch=amd64,kubernetes.io/hostname=wx-cloudnative-master-2,kubernetes.io/os=linux,node-role.kubernetes.io/master=

wx-cloudnative-master-3 Ready master 70d v1.19.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingresscontroller=member,kubernetes.io/arch=amd64,kubernetes.io/hostname=wx-cloudnative-master-3,kubernetes.io/os=linux,node-role.kubernetes.io/master=

Nginx Ingress Controller

https://docs.nginx.com/nginx-ingress-controller/

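Configure and install. Based on the label, the Pods are started as DaemonSet members on the designated nodes.

# helm repo add nginx-stable https://helm.nginx.com/stable

# helm fetch nginx-stable/nginx-ingress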

# tar xzvf nginx-ingress-0.7.0.tgz

# cd nginx-ingress

# vim values.yaml

line7 - kind: daemonset

line23 - hostNetwork: true

line87 - nodeSelector: {ingresscontroller: member}

line120 - replicaCount: 2

line141 - setAsDefaultIngress: true

line191 - type: NodePort
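The nodeSelector setting restricts the DaemonSet to nodes whose labels contain every key/value pair of the selector. The sketch below models that matching rule with the labels applied earlier in this document; the function name `select_nodes` is hypothetical, and the label sets are reduced to the one custom label relevant here (the worker nodes are assumed not to carry it).

```python
def select_nodes(node_labels, selector):
    """Return the sorted names of nodes whose labels satisfy every selector pair."""
    return sorted(
        name
        for name, labels in node_labels.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


# Only the custom label is modelled; built-in labels are omitted for brevity.
NODE_LABELS = {
    "wx-cloudnative-master-1": {},
    "wx-cloudnative-master-2": {"ingresscontroller": "member"},
    "wx-cloudnative-master-3": {"ingresscontroller": "member"},
    "wx-cloudnative-worker-1": {},
    "wx-cloudnative-worker-2": {},
}

ingress_nodes = select_nodes(NODE_LABELS, {"ingresscontroller": "member"})
print(ingress_nodes)
```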

Use a dedicated kube-ingress namespace

# kubectl create namespace kube-ingress

# helm install cloudnativengingress . -n kube-ingress

# kubectl get pods -n kube-ingress -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cloudnativengingress-nginx-ingress-4rdt6 1/1 Running 2 56d 10.135.204.45 wx-cloudnative-master-3 <none> <none>

cloudnativengingress-nginx-ingress-77p5c 1/1 Running 0 20d 10.135.204.44 wx-cloudnative-master-2 <none> <none>

# kubectl get ingressclass -A

NAME CONTROLLER PARAMETERS AGE

nginx nginx.org/ingress-controller <none> 24h

# kubectl describe ingressclass nginx

Name: nginx

Labels: app.kubernetes.io/managed-by=Helm

Annotations: ingressclass.kubernetes.io/is-default-class: true

meta.helm.sh/release-name: cloudnativengingress

meta.helm.sh/release-namespace: kube-ingress

Controller: nginx.org/ingress-controller

Events: <none>

TLS certificates

Use cert-manager as the cluster's certificate management controller

Certificate Manager

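Use the kube-public namespace (kubeadm already creates this namespace, so the create command below may report that it already exists)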

# kubectl create namespace kube-public

# helm repo add jetstack https://charts.jetstack.io

# helm repo update

# helm fetch jetstack/cert-manager

# tar xzvf cert-manager-v1.0.3.tgz

# cd cert-manager

Use host network ports

# vim values.yaml

line25 - namespace: "kube-public"

line27 - installCRDs: true

line270 - securePort: 10251

line281 - hostNetwork: true

Installation

# helm install cert-manager . -n kube-public

NAME: cert-manager

LAST DEPLOYED: Fri Oct 23 17:42:28 2020

NAMESPACE: kube-public

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

cert-manager has been deployed successfully!

In order to begin issuing certificates, you will need to set up a ClusterIssuer

or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

More information on the different types of issuers and how to configure them

can be found in our documentation:

https://cert-manager.io/docs/configuration/

For information on how to configure cert-manager to automatically provision

Certificates for Ingress resources, take a look at the `ingress-shim`

documentation:

https://cert-manager.io/docs/usage/ingress/

Test certificate issuance

# cat <<EOF > test-cert-resources.yaml

apiVersion: v1

kind: Namespace

metadata:

name: cert-manager-test

---

apiVersion: cert-manager.io/v1

kind: Issuer

metadata:

name: test-selfsigned

namespace: cert-manager-test

spec:

selfSigned: {}

---

apiVersion: cert-manager.io/v1

kind: Certificate

metadata:

name: selfsigned-cert

namespace: cert-manager-test

spec:

dnsNames:

- cloudnative.wx

secretName: selfsigned-cert-tls

issuerRef:

name: test-selfsigned

EOF

# kubectl apply -f test-cert-resources.yaml

namespace/cert-manager-test created

issuer.cert-manager.io/test-selfsigned created

certificate.cert-manager.io/selfsigned-cert created

# kubectl describe certificate -n cert-manager-test

...

Spec:

Dns Names:

cloudnative.wx

Issuer Ref:

Name: test-selfsigned

Secret Name: selfsigned-cert-tls

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Issuing 94s cert-manager Issuing certificate as Secret does not exist

Normal Generated 94s cert-manager Stored new private key in temporary Secret resource "selfsigned-cert-5n9r4"

Normal Requested 94s cert-manager Created new CertificateRequest resource "selfsigned-cert-vdxz4"

Normal Issuing 94s cert-manager The certificate has been successfully issued

# kubectl delete -f test-cert-resources.yaml

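ACME (public network environments)

cert-manager integrates northbound with various certificate authorities; if the cluster is reachable from the Internet, ACME can be used.

cert-manager provides two custom resources for defining certificate authorities: Issuer and ClusterIssuer. An Issuer can only issue certificates in its own namespace, whereas a ClusterIssuer can issue certificates in any namespace.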
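The scoping rule for the two issuer kinds can be written down as a small predicate. This is only an illustration of the rule stated above, not cert-manager code; the function name `can_issue` and its parameters are hypothetical.

```python
def can_issue(issuer_kind, issuer_namespace, certificate_namespace):
    """Return True if an issuer of the given kind may sign a certificate in certificate_namespace."""
    if issuer_kind == "ClusterIssuer":
        # Cluster-scoped: usable from any namespace.
        return True
    if issuer_kind == "Issuer":
        # Namespaced: only certificates in the same namespace.
        return issuer_namespace == certificate_namespace
    raise ValueError(f"unknown issuer kind: {issuer_kind}")


print(can_issue("Issuer", "cert-manager-test", "cert-manager-test"))
print(can_issue("Issuer", "cert-manager-test", "rook-ceph"))
print(can_issue("ClusterIssuer", None, "rook-ceph"))
```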

# vim acme-clusterissuer.yaml

apiVersion: cert-manager.io/v1alpha2

kind: ClusterIssuer

metadata:

name: letsencrypt-staging

spec:

acme:

email: zeastion@live.cn

server: https://acme-staging-v02.api.letsencrypt.org/directory

privateKeySecretRef:

name: cloudnative-issure-account-key

solvers:

- http01:

ingress:

class: nginx

# kubectl apply -f acme-clusterissuer.yaml

# kubectl get clusterissuer -o wide

NAME READY STATUS AGE

letsencrypt-staging True The ACME account was registered with the ACME server 5m19s

# kubectl describe clusterissuer letsencrypt-staging

Name: letsencrypt-staging

Namespace:

Labels: <none>

Annotations: <none>

API Version: cert-manager.io/v1

Kind: ClusterIssuer

Metadata:

...

Self Link: /apis/cert-manager.io/v1/clusterissuers/letsencrypt-staging

UID: bbf6287b-d05f-40fa-968c-c2e6378d077c

Spec:

Acme:

Email: zeastion@live.cn

Preferred Chain:

Private Key Secret Ref:

Name: cloudnative-issure-account-key

Server: https://acme-staging-v02.api.letsencrypt.org/directory

Solvers:

http01:

Ingress:

Class: nginx

Status:

Acme:

Last Registered Email: zeastion@live.cn

Uri: https://acme-staging-v02.api.letsencrypt.org/acme/acct/16302840

Conditions:

Last Transition Time: 2020-10-26T07:40:28Z

Message: The ACME account was registered with the ACME server

Reason: ACMEAccountRegistered

Status: True

Type: Ready

Events: <none>

Automatically issue certificates for Ingress resources

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

# add an annotation indicating the issuer to use.

cert-manager.io/cluster-issuer: *nameOfClusterIssuer*

kubernetes.io/tls-acme: "true"

name: myIngress

...

spec:

...

tls: # < placing a host in the TLS config will indicate a certificate should be created

- hosts:

- *example.com*

secretName: *myingress-cert* # < cert-manager will store the created certificate in this secret.

CA (internal environments)

For office networks and other internal environments, a self-built CA is sufficient and simpler.

Create the CA private key

# openssl genrsa -out ca.key 2048

Create the self-signed certificate

# openssl req -x509 -new -nodes -key ca.key -subj "/CN=CloudNative" -days 3650 -reqexts v3_req -extensions v3_ca -out ca.crt

Store the key pair above in a Secret resource

# kubectl create secret tls ca-key-pair --cert=ca.crt --key=ca.key --namespace=kube-public

# kubectl describe secret ca-key-pair -n kube-public

Name: ca-key-pair

Namespace: kube-public

Labels: <none>

Annotations: <none>

Type: kubernetes.io/tls

Data

====

tls.crt: 1099 bytes

tls.key: 1675 bytes

Create a CA ClusterIssuer that references the Secret above

# vim ca-clusterissuer.yaml

apiVersion: cert-manager.io/v1alpha2

kind: ClusterIssuer

metadata:

name: ca-clusterissuer

namespace: kube-public

spec:

ca:

secretName: ca-key-pair

Apply the manifest (kubectl apply -f ca-clusterissuer.yaml), then inspect the ClusterIssuer resource

# kubectl describe clusterissuer ca-clusterissuer

Name: ca-clusterissuer

Namespace:

Labels: <none>

Annotations: <none>

API Version: cert-manager.io/v1

Kind: ClusterIssuer

Metadata:

Creation Timestamp: 2020-12-10T09:55:59Z

Generation: 1

Managed Fields:

API Version: cert-manager.io/v1

Fields Type: FieldsV1

fieldsV1:

f:status:

f:conditions:

Manager: controller

Operation: Update

Time: 2020-12-10T09:55:56Z

API Version: cert-manager.io/v1alpha2

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.:

f:kubectl.kubernetes.io/last-applied-configuration:

f:spec:

.:

f:ca:

.:

f:secretName:

Manager: kubectl-client-side-apply

Operation: Update

Time: 2020-12-10T09:55:59Z

Resource Version: 26235249

Self Link: /apis/cert-manager.io/v1/clusterissuers/ca-clusterissuer

UID: edc36778-a49e-4e27-bfa5-900a7a40edbb

Spec:

Ca:

Secret Name: ca-key-pair

Status:

Conditions:

Last Transition Time: 2020-12-10T09:55:56Z

Message: Signing CA verified

Reason: KeyPairVerified

Status: True

Type: Ready

Events: <none>

Automatically issue certificates for Ingress resources

...

annotations:

kubernetes.io/ingress.class: nginx

cert-manager.io/cluster-issuer: "ca-clusterissuer"

nginx.org/ssl-services: "*ServiceName*"

...

Kubernetes Dashboard

https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dashboard/dashboard.yaml

https://artifacthub.io/packages/helm/k8s-dashboard/kubernetes-dashboard

Install with Helm

# helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

# helm fetch kubernetes-dashboard/kubernetes-dashboard

# tar xzvf kubernetes-dashboard-2.8.2.tgz

# cd kubernetes-dashboard/

Associate the CA ClusterIssuer

# vim values.yaml

line125 - enabled: true

...

annotations:

kubernetes.io/ingress.class: nginx

cert-manager.io/cluster-issuer: "ca-clusterissuer"

nginx.org/ssl-services: "cnk8sdashboard-kubernetes-dashboard"

#kubernetes.io/tls-acme: 'true'

#cert-manager.io/cluster-issuer: "letsencrypt-staging"

...

hosts:

- k8sdashboard.cloudnative.wx

tls:

- secretName: k8sdashboard.cloudnative.wx

hosts:

- k8sdashboard.cloudnative.wx

...

Create

# kubectl create namespace kube-dashboard

# helm install cnk8sdashboard . -n kube-dashboard

Add an access account

https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

# vim admin-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin

namespace: kube-dashboard

# vim admin-clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin

namespace: kube-dashboard

# kubectl apply -f admin-serviceaccount.yaml

# kubectl apply -f admin-clusterrolebinding.yaml

Use the resulting token to log in at https://k8sdashboard.cloudnative.wx

# kubectl get secret -n kube-dashboard | grep admin

admin-token-qf47f kubernetes.io/service-account-token 3 47m

# kubectl describe secret admin-token-qf47f -n kube-dashboard | grep token:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkFVZ0picFhwbVpCSnRFcHN4QmktbkpsNWU3ZmV5aElmZEVtUkd1N1cwM0EifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi1xZjQ3ZiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjIyOTg0NzliLWZhMjUtNGU4OS04YzZjLWM4Y2VjNWJkZTZmYiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLWRhc2hib2FyZDphZG1pbiJ9.Jo0ORC9MplqB4L3rWd-YrgfkQYrPjkvRUoYgReSQw8p4hzbcqF1rB-o--NtOroyrOoFWt4KK47oHectZeE8skbNrlLRYZGWUudmeu3IBdS5ivu9REi28Wm1eS9st5bkd-JL-iko0SI7xo3DKvo2nu8PxiuUKHiuJqdPUqTGOPhjbE1hFx05RIrRoG1ta3dSrV4SxmkS3HEdanQRSGtcM3zu6W2r9pdYrVwJnEH8Rx5Dz4g0XAy8Oli18pHNpthXo8TmUyrfYa1a7i1z50DCsKhdw9L6rhlPyIG1Akk_g7WqCxhB65KI-ZuW3qhbfBX6TmoQUGyBVFEFakTv_QmG5RA
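A service account token of this kind is a JWT: three base64url segments separated by dots, with the claims in the middle segment. The sketch below decodes the claims without verifying the signature, which is enough to confirm which account a token belongs to. It builds a sample token in code instead of reusing a real one; the helper names and the placeholder signature are hypothetical. Since this account is bound to cluster-admin, a real token should be handled as a secret.

```python
import base64
import json


def b64url_decode(segment):
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def jwt_claims(token):
    """Return the claims of a JWT as a dict. The signature is NOT verified."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


def _b64url(obj):
    """Encode a dict as an unpadded base64url JSON segment (sample data only)."""
    raw = json.dumps(obj, separators=(",", ":")).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Sample token constructed for the example; the signature is a placeholder.
sample_token = ".".join([
    _b64url({"alg": "RS256"}),
    _b64url({
        "iss": "kubernetes/serviceaccount",
        "sub": "system:serviceaccount:kube-dashboard:admin",
    }),
    "signature-placeholder",
])

claims = jwt_claims(sample_token)
print(claims["sub"])
```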

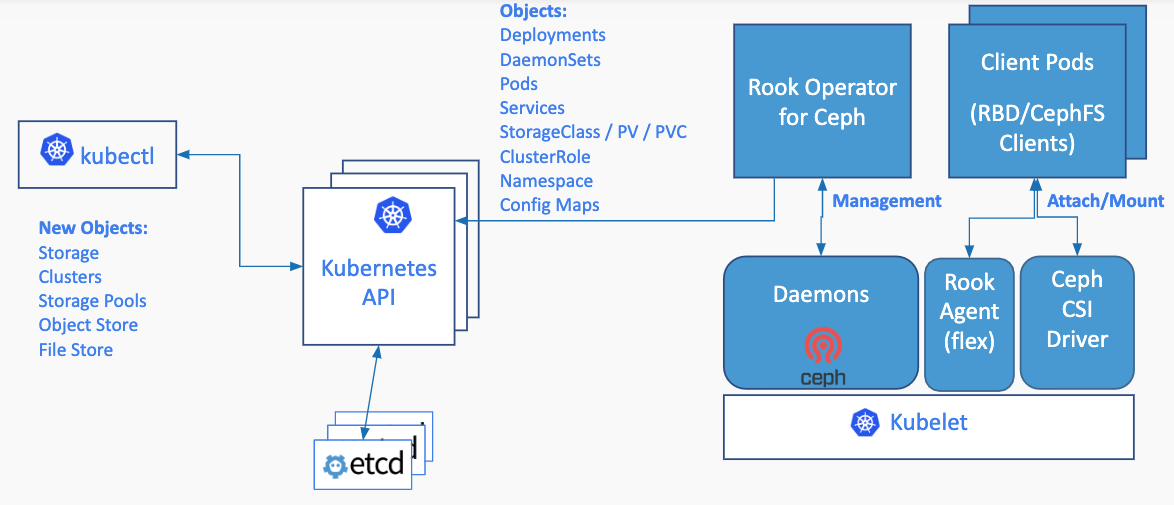

Ceph (Rook)

Master2, Master3, Worker1, and Worker2 each have twelve 3 TB data disks; each disk is used as one OSD

Install with Helm

# kubectl create namespace rook-ceph

# helm repo add rook-release https://charts.rook.io/release

# helm search repo rook-ceph --versions

# helm install cloudnativeceph -n rook-ceph rook-release/rook-ceph

# helm ls -n rook-ceph

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

cloudnativeceph rook-ceph 1 2020-10-16 13:53:55.349112672 +0800 CST deployed rook-ceph-v1.4.5

Create the cluster

References:

https://github.com/rook/rook/blob/master/Documentation/ceph-cluster-crd.md

https://github.com/rook/rook.github.io/blob/master/docs/rook/v0.7/cluster-crd.md

Download cluster.yaml (https://github.com/rook/rook/blob/release-1.4/cluster/examples/kubernetes/ceph/cluster.yaml)

Edit the nodes field to use the 12 disks sdb through sdm

# vim rook/cluster.yaml

line72 - provider: host

...

storage:

useAllNodes: false

useAllDevices: false

config:

nodes:

- name: "wx-cloudnative-master-2"

deviceFilter: "^sd[b-m]"

- name: "wx-cloudnative-master-3"

deviceFilter: "^sd[b-m]"

- name: "wx-cloudnative-worker-1"

deviceFilter: "^sd[b-m]"

- name: "wx-cloudnative-worker-2"

deviceFilter: "^sd[b-m]"
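The deviceFilter value is a regular expression matched against short device names. As a quick sanity check, the sketch below applies the same pattern to the names sda through sdz and confirms that it selects exactly the 12 data disks while leaving the system disk sda out. The candidate list is generated for illustration and is not read from a real host.

```python
import re
import string

# Same pattern as the deviceFilter in cluster.yaml.
DEVICE_FILTER = re.compile(r"^sd[b-m]")

# Hypothetical device names sda..sdz, generated for the check.
candidates = ["sd" + letter for letter in string.ascii_lowercase]
selected = [name for name in candidates if DEVICE_FILTER.search(name)]

print(len(selected), selected[0], selected[-1])
```

Because the pattern is anchored only at the start, longer names that begin with the same prefix would also match, so a stricter pattern may be preferable on hosts with more devices.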

Create

# kubectl create -f rook/cluster.yaml

# kubectl get pods -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-c78s4 3/3 Running 9 69d

csi-cephfsplugin-f5d7f 3/3 Running 6 69d

csi-cephfsplugin-jhsfg 3/3 Running 6 69d

csi-cephfsplugin-provisioner-5c65b94c8d-b2q6k 6/6 Running 7 21d

csi-cephfsplugin-provisioner-5c65b94c8d-xmpqf 6/6 Running 17 69d

csi-cephfsplugin-zn42q 3/3 Running 9 69d

csi-rbdplugin-64tkq 3/3 Running 6 69d

csi-rbdplugin-mvf45 3/3 Running 9 69d

csi-rbdplugin-provisioner-569c75558-8xqbs 6/6 Running 7 21d

csi-rbdplugin-provisioner-569c75558-s68gx 6/6 Running 20 59d

csi-rbdplugin-vphdj 3/3 Running 9 69d

csi-rbdplugin-xshx2 3/3 Running 6 69d

rook-ceph-crashcollector-wx-cloudnative-master-2-669bc8587jrl6n 1/1 Running 3 69d

rook-ceph-crashcollector-wx-cloudnative-master-3-767f4c9f9gt2hp 1/1 Running 2 69d

rook-ceph-crashcollector-wx-cloudnative-worker-1-59ff4fcf6972sj 1/1 Running 1 21d

rook-ceph-crashcollector-wx-cloudnative-worker-2-5ffffb554k72nw 1/1 Running 1 21d

rook-ceph-mgr-a-8684b6f644-q2ks8 1/1 Running 6 69d

rook-ceph-mon-a-5687786c6d-qbn6n 1/1 Running 1 21d

rook-ceph-mon-b-798f7d9c5f-cx6cj 1/1 Running 1 21d

rook-ceph-mon-c-58cd98ff65-9pnsx 1/1 Running 3 68d

rook-ceph-operator-858bc99959-n82dm 1/1 Running 3 59d

rook-ceph-osd-0-7d9cc585b5-rbzpr 1/1 Running 1 21d

rook-ceph-osd-1-6cc88f6b6d-qcdfb 1/1 Running 12 69d

rook-ceph-osd-10-7fd649f67b-fl29p 1/1 Running 12 69d

rook-ceph-osd-11-7fd6c55c68-klldb 1/1 Running 14 69d

rook-ceph-osd-12-55cbd47d8-9m7dx 1/1 Running 1 21d

rook-ceph-osd-13-75989ff544-9w642 1/1 Running 1 21d

rook-ceph-osd-14-6fb7d9bb4d-bfxwz 1/1 Running 12 69d

rook-ceph-osd-15-6f899f5878-pmgwk 1/1 Running 14 69d

rook-ceph-osd-16-76957878c8-8r9pt 1/1 Running 1 21d

rook-ceph-osd-17-fb5859cf-j7448 1/1 Running 1 21d

rook-ceph-osd-18-68cb5f774c-fxhmk 1/1 Running 12 69d

rook-ceph-osd-19-dbbf78f55-fzjx2 1/1 Running 1 21d

rook-ceph-osd-2-68559b959f-7wvp8 1/1 Running 1 21d

rook-ceph-osd-20-84d687774d-8vj94 1/1 Running 14 69d

rook-ceph-osd-21-f585fffbf-28bzv 1/1 Running 1 21d

rook-ceph-osd-22-78d996c454-6gf6c 1/1 Running 12 69d

rook-ceph-osd-23-5b889cb4f7-t4zm6 1/1 Running 1 21d

rook-ceph-osd-24-569b9f9755-9cbsx 1/1 Running 14 69d

rook-ceph-osd-25-56c4c54758-cpn96 1/1 Running 1 21d

rook-ceph-osd-26-79ffd5f9dc-csft5 1/1 Running 12 69d

rook-ceph-osd-27-67fbc74f5c-ct9gw 1/1 Running 1 21d

rook-ceph-osd-28-c9747946-zpwms 1/1 Running 14 69d

rook-ceph-osd-29-7b5b965487-qxhnj 1/1 Running 1 21d

rook-ceph-osd-3-78679764b9-6vh4f 1/1 Running 14 69d

rook-ceph-osd-30-d9cf5f999-8d4nn 1/1 Running 12 69d

rook-ceph-osd-31-57cd898cd9-qd2nz 1/1 Running 1 21d

rook-ceph-osd-32-d6cb85666-jntgr 1/1 Running 14 69d

rook-ceph-osd-33-5856cbcf9-rcxbg 1/1 Running 1 21d

rook-ceph-osd-34-56df5fdb9-2dhrs 1/1 Running 1 21d

rook-ceph-osd-35-7d484d6b7b-sfk9w 1/1 Running 12 69d

rook-ceph-osd-36-97bdc7454-pss8l 1/1 Running 14 69d

rook-ceph-osd-37-689455458b-m2lkb 1/1 Running 1 21d

rook-ceph-osd-38-5cb946d6d8-xgb7c 1/1 Running 1 21d

rook-ceph-osd-39-d688d54c9-p695j 1/1 Running 12 69d

rook-ceph-osd-4-546b44c79-pmwzb 1/1 Running 1 21d

rook-ceph-osd-40-7b4b4d79cb-72rrm 1/1 Running 14 69d

rook-ceph-osd-41-66858b66c4-lt92w 1/1 Running 1 21d

rook-ceph-osd-42-7d46c5cb7c-d4vj4 1/1 Running 1 21d

rook-ceph-osd-43-744787dd9c-pns4s 1/1 Running 12 69d

rook-ceph-osd-44-7df764cd6-b2kkr 1/1 Running 14 69d

rook-ceph-osd-45-58f74c7b85-k698f 1/1 Running 1 21d

rook-ceph-osd-46-8c99fb6cc-cn7mm 1/1 Running 12 69d

rook-ceph-osd-47-787f9567f5-jf9pk 1/1 Running 14 69d

rook-ceph-osd-5-c79df46bc-rszk4 1/1 Running 12 69d

rook-ceph-osd-6-6d85cdd88f-rzv7x 1/1 Running 1 21d

rook-ceph-osd-7-65d9865cc7-x7b2k 1/1 Running 14 69d

rook-ceph-osd-8-674f88bdb6-g59jr 1/1 Running 1 21d

rook-ceph-osd-9-c88985bd8-wplc6 1/1 Running 1 21d

rook-ceph-osd-prepare-wx-cloudnative-master-2-6cdtd 0/1 Completed 0 7h21m

rook-ceph-osd-prepare-wx-cloudnative-master-3-q6rsv 0/1 Completed 0 7h21m

rook-ceph-osd-prepare-wx-cloudnative-worker-1-zlnzs 0/1 Completed 0 7h21m

rook-ceph-osd-prepare-wx-cloudnative-worker-2-j6rk7 0/1 Completed 0 7h21m

rook-ceph-tools-7b956ff5dc-bbhrl 1/1 Running 3 68d

rook-discover-k4mhh 1/1 Running 2 69d

rook-discover-v8g45 1/1 Running 4 69d

rook-discover-xxvkf 1/1 Running 2 69d

rook-discover-zntr4 1/1 Running 3 69d

Check the changes on the disks

# lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sda

└─sda1 xfs 555f0ba1-80c2-45a3-9fb6-7a61abea08bd /

sdb LVM2_member SDcVYN-mJZh-VSa1-82Gx-UntZ-XSf4-g9oQHY

└─ceph--c05a90c0--f9df--424e--bdaa--332b59c5aeb1-osd--data--6aa9bb69--b3ec--44e6--81bc--aae7f808aaeb

sr0 iso9660 CentOS 7 x86_64 2020-04-22-00-51-40-00

Use the toolbox management tool

# kubectl create -f rook/toolbox.yaml

# kubectl -n rook-ceph get pod -l "app=rook-ceph-tools"

NAME READY STATUS RESTARTS AGE

rook-ceph-tools-6bdcd78654-l4szw 1/1 Running 0 30s

Check the status

# kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash

# ceph status

cluster:

id: e37f16af-b8c6-4b8c-b88d-e8746e504e65

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 2w)

mgr: a(active, since 7h)

osd: 48 osds: 48 up (since 2w), 48 in (since 8w)

data:

pools: 2 pools, 33 pgs

objects: 1.19k objects, 3.4 GiB

usage: 62 GiB used, 131 TiB / 131 TiB avail

pgs: 33 active+clean

io:

client: 14 KiB/s wr, 0 op/s rd, 0 op/s wr

# ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 wx-cloudnative-worker-1 1476M 2792G 0 0 0 0 exists,up

1 wx-cloudnative-master-3 1235M 2792G 0 0 0 0 exists,up

2 wx-cloudnative-worker-2 1245M 2792G 0 0 0 0 exists,up

...

46 wx-cloudnative-master-3 1242M 2792G 0 0 0 0 exists,up

47 wx-cloudnative-master-2 1358M 2792G 0 0 0 0 exists,up

# ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 131 TiB 131 TiB 14 GiB 62 GiB 0.05

TOTAL 131 TiB 131 TiB 14 GiB 62 GiB 0.05

--- POOLS ---

POOL ID STORED OBJECTS USED %USED MAX AVAIL

device_health_metrics 1 1.8 MiB 48 1.8 MiB 0 41 TiB

replicapool 2 3.4 GiB 1.15k 3.4 GiB 0 41 TiB

# rados df

POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

device_health_metrics 1.8 MiB 48 0 144 0 0 0 3263 3.9 MiB 3312 3.2 MiB 0 B 0 B

replicapool 3.4 GiB 1145 0 3435 0 0 0 106945 998 MiB 60285206 614 GiB 0 B 0 B

total_objects 1193

total_used 62 GiB

total_avail 131 TiB

total_space 131 TiB
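The figures in the outputs above can be cross-checked with a little arithmetic: 4 nodes with 12 disks each give 48 OSDs, the raw capacity follows from the per-OSD size, and the ratio of COPIES to OBJECTS in `rados df` shows the replication factor of replicapool. The last step, which estimates MAX AVAIL, assumes a full ratio of 0.95; that value is an assumption made for this sketch and is not shown anywhere in the outputs.

```python
# Figures copied from the command outputs in this document.
NODES = 4
DISKS_PER_NODE = 12
AVAIL_PER_OSD_GIB = 2792          # AVAIL column of `ceph osd status`
REPLICAPOOL_OBJECTS = 1145        # OBJECTS column of `rados df`
REPLICAPOOL_COPIES = 3435         # COPIES column of `rados df`

osd_count = NODES * DISKS_PER_NODE
raw_tib = osd_count * AVAIL_PER_OSD_GIB / 1024
replicas = REPLICAPOOL_COPIES // REPLICAPOOL_OBJECTS

# Assumption: usable space is capped by a full ratio of 0.95.
ASSUMED_FULL_RATIO = 0.95
estimated_max_avail_tib = raw_tib / replicas * ASSUMED_FULL_RATIO

print(osd_count, round(raw_tib), replicas, int(estimated_max_avail_tib))
```

Under these assumptions the estimate lands close to the 131 TiB raw capacity and the 41 TiB MAX AVAIL reported by `ceph df`.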

Ceph Dashboard

Configure external access through Ingress

# vim dashboard-external-https.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: rook-ceph-mgr-dashboard

namespace: rook-ceph

annotations:

kubernetes.io/ingress.class: "nginx"

cert-manager.io/cluster-issuer: "ca-clusterissuer"

nginx.org/ssl-services: "rook-ceph-mgr-dashboard"

#kubernetes.io/tls-acme: "true"

#cert-manager.io/cluster-issuer: "letsencrypt-staging"

spec:

tls:

- hosts:

- rook-ceph.cloudnative.wx

secretName: rook-ceph.cloudnative.wx

rules:

- host: rook-ceph.cloudnative.wx

http:

paths:

- path: /

backend:

serviceName: rook-ceph-mgr-dashboard

servicePort: https-dashboard

# kubectl create -f rook/dashboard-external-https.yaml

# kubectl -n rook-ceph get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

csi-cephfsplugin-metrics ClusterIP 10.20.226.50 <none> 8080/TCP,8081/TCP 69d

csi-rbdplugin-metrics ClusterIP 10.20.163.28 <none> 8080/TCP,8081/TCP 69d

rook-ceph-mgr ClusterIP 10.20.102.232 <none> 9283/TCP 69d

rook-ceph-mgr-dashboard ClusterIP 10.20.179.9 <none> 8443/TCP 69d

# kubectl describe ingress rook-ceph-mgr-dashboard -n rook-ceph

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

Name: rook-ceph-mgr-dashboard

Namespace: rook-ceph

Address:

Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)

TLS:

rook-ceph.cloudnative.wx terminates rook-ceph.cloudnative.wx

Rules:

Host Path Backends

---- ---- --------

rook-ceph.cloudnative.wx

/ rook-ceph-mgr-dashboard:https-dashboard (10.135.204.44:8443)

Annotations: cert-manager.io/cluster-issuer: ca-clusterissuer

kubernetes.io/ingress.class: nginx

nginx.org/ssl-services: rook-ceph-mgr-dashboard

Events: <none>

Open https://rook-ceph.cloudnative.wx in a browser

Username: admin. Password: run the following command and read its output

# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

storageclass

# wget https://github.com/rook/rook/raw/release-1.4/cluster/examples/kubernetes/ceph/csi/rbd/storageclass.yaml

# mv storageclass.yaml block-storageclass.yaml

# kubectl create -f rook/block-storageclass.yaml

# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 113m

Mark it as the default StorageClass

# kubectl patch storageclass rook-ceph-block -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/rook-ceph-block patched

# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

rook-ceph-block (default) rook-ceph.rbd.csi.ceph.com Delete Immediate true 114m