K8S Enterprise Container Cloud 02 - Deploying K8S 1.16.0 with Kubeadm

Ubuntu 19.04 Kubernetes 1.16.0

Environment

Use kubeadm to build a 4-node (1 master + 3 worker) Kubernetes 1.16.0 cluster.

| Role | Components | Servers |
|---|---|---|
| Master | apiserver, controller-manager, scheduler, etcd, coredns, weave-net, kube-proxy | k01 - 192.168.52.151 |
| Worker | kube-proxy, weave-net, kubernetes-dashboard, csi-cephfsplugin, csi-cephfsplugin-provisioner, csi-rbdplugin, csi-rbdplugin-provisioner, rook-discover, rook-ceph-operator, rook-ceph-mgr, rook-ceph-mon, rook-ceph-osd | k02 - 192.168.52.152, k03 - 192.168.52.153, k04 - 192.168.52.154 |

ALL

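The deployment steps are grouped by where they run: on all nodes (ALL), on the master (MASTER), or on the workers (WORKER).

Disable swap

For Pod memory performance reasons, Kubernetes does not use swap space, and by default the kubelet refuses to start while swap is enabled.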

# sed -i '/swap/ s/^/#/' /etc/fstab

# swapoff -a
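To confirm that swap is really off after these two commands, the check below counts the active swap devices. It is a minimal sketch that assumes the usual Linux `/proc/swaps` layout (one header line, then one line per device); the function name `active_swap_count` is made up for this example.

```shell
# Count active swap devices listed in a /proc/swaps-style file.
# The first line is the column header, so only later non-empty lines count.
# $1: file to read (defaults to /proc/swaps).
active_swap_count() {
  swaps_file="${1:-/proc/swaps}"
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file"
}
```

On a node, `[ "$(active_swap_count)" -eq 0 ] && echo "swap is off"` should print the message once `swapoff -a` has run.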

Configure package sources

Use the Alibaba Cloud (Aliyun) mirrors

# vim /etc/apt/sources.list

deb http://mirrors.aliyun.com/ubuntu/ disco main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ disco main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ disco-security main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ disco-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ disco-updates main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ disco-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ disco-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ disco-backports main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ disco-proposed main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ disco-proposed main restricted universe multiverse

# vim /etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

Import the apt key and update the system

# curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

# apt-get update

# apt-get upgrade

NTP

All nodes use the same time source

# ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

# bash -c "echo 'Asia/Shanghai' > /etc/timezone"

# apt-get install -y ntpdate

# ntpdate ntp1.aliyun.com

Docker

Configure the Aliyun registry mirror (accelerator) for Docker image pulls

# apt-get install docker.io

# vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://32lrw8s6.mirror.aliyuncs.com"]

}

# systemctl daemon-reload

# systemctl enable docker.service

# systemctl restart docker

# docker info

...

Registry Mirrors:

https://32lrw8s6.mirror.aliyuncs.com/

...
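When the same setup has to be repeated on four nodes, editing `daemon.json` in vim gets tedious. The helper below is one possible non-interactive variant; the function name `write_docker_mirror_config` is hypothetical, and it overwrites the target file, so it only suits a node with no other Docker daemon settings.

```shell
# Write a Docker daemon.json that sets a single registry mirror.
# Any existing content of the target file is replaced.
# $1: mirror URL, $2: target file (defaults to /etc/docker/daemon.json).
write_docker_mirror_config() {
  mirror_url="$1"
  target="${2:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
{
  "registry-mirrors": ["$mirror_url"]
}
EOF
}
```

Calling `write_docker_mirror_config "https://32lrw8s6.mirror.aliyuncs.com"` as root and then restarting Docker should give the same `docker info` output as shown above.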

Kubeadm

# apt-get install kubeadm
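A plain `apt-get install kubeadm` installs whatever version the repository currently offers, which may be newer than the 1.16.0 used in this post. One way to stay on a fixed release is to pin kubelet, kubeadm and kubectl to the same package version. The sketch below only builds the package arguments; the version string format `1.16.0-00` is an assumption and should be checked with `apt-cache madison kubeadm`, and `k8s_pinned_packages` is a made-up name.

```shell
# Build "name=version" arguments for apt-get so that kubelet, kubeadm and
# kubectl are all installed at the same release.
# $1: package version as listed by `apt-cache madison kubeadm`
#     (assumed here to look like 1.16.0-00).
k8s_pinned_packages() {
  version="$1"
  for pkg in kubelet kubeadm kubectl; do
    printf '%s=%s ' "$pkg" "$version"
  done
  printf '\n'
}
```

It would be used as `apt-get install -y $(k8s_pinned_packages 1.16.0-00)`, optionally followed by `apt-mark hold kubelet kubeadm kubectl` so that a later `apt-get upgrade` does not move the cluster to another version.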

MASTER

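The master node runs the core k8s components, the network add-on, etcd, and DNS

Core components

Pull the images from the Aliyun registry; the version is 1.16.0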

# kubeadm init --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.16.0 | tee /etc/kube-server-key

...

kubeadm join 192.168.52.151:6443 --token hpql37.c31wenbkv9fl06ur \

--discovery-token-ca-cert-hash sha256:013e5f7fd2a8d8a1b1450d76b71dfe364a2727230892cfdc371de743a998d974

...
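Because the `kubeadm init` output is saved with `tee`, the join command can be pulled back out of `/etc/kube-server-key` later instead of being copied by hand. The function below is a rough sketch that assumes the command starts at the beginning of a line and continues over backslash-terminated lines, as in the output above; `extract_join_command` is a hypothetical name.

```shell
# Print the "kubeadm join ..." command found in a saved `kubeadm init` log,
# joining backslash-continued lines into a single line.
# $1: path to the saved log.
extract_join_command() {
  awk '
    /^kubeadm join/ { capture = 1 }
    capture {
      line = $0
      cont = sub(/\\[ \t]*$/, "", line)      # 1 if the line ended with a backslash
      gsub(/^[ \t]+|[ \t]+$/, "", line)      # trim surrounding whitespace
      out = (out == "" ? line : out " " line)
      if (!cont) { print out; exit }         # last line of the command
    }
  ' "$1"
}
```

For example, `extract_join_command /etc/kube-server-key` on the master prints the one-line command to run on each worker.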

Set up the kubeconfig file, which holds the credentials for accessing the K8S cluster

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

List the cluster nodes

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k01 NotReady master 29m v1.16.0

# kubectl describe node k01

...

Ready False Tue, 17 Sep 2019 19:01:26 +0800 Tue, 17 Sep 2019 18:31:18 +0800 KubeletNotReady runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

...

Pods that depend on the network, such as coredns, stay in the Pending state

# kubectl get pods

No resources found.

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-2c4jp 0/1 Pending 0 35m

coredns-bccdc95cf-5j4ph 0/1 Pending 0 35m

etcd-k01 1/1 Running 0 35m

kube-apiserver-k01 1/1 Running 0 34m

kube-controller-manager-k01 1/1 Running 0 34m

kube-proxy-jwcl6 1/1 Running 0 35m

kube-scheduler-k01 1/1 Running 0 35m
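With longer listings it is easy to miss a Pod that is not up yet. The filter below is a small sketch that reads `kubectl get pods` output (without `-A`, so the columns are NAME, READY, STATUS, ...) and prints only the Pods that are neither fully ready nor Completed; `unready_pods` is a name chosen for this example.

```shell
# Read `kubectl get pods` output on stdin and print the names of Pods that
# are not fully ready. Completed Pods (finished jobs) are ignored.
# Assumes the columns NAME READY STATUS ..., i.e. no namespace column.
unready_pods() {
  awk 'NR > 1 && $3 != "Completed" {
         split($2, ready, "/")               # READY looks like "1/1"
         if (ready[1] != ready[2] || $3 != "Running") print $1
       }'
}
```

At this point `kubectl get pods -n kube-system | unready_pods` would be expected to list the two coredns Pods.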

Network

Third-party add-ons recommended by the official documentation

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Weave is chosen as the network add-on

# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.extensions/weave-net created

Once Weave is running, the coredns Pods become Running

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-2c4jp 1/1 Running 0 63m

coredns-bccdc95cf-5j4ph 1/1 Running 0 63m

etcd-k01 1/1 Running 1 63m

kube-apiserver-k01 1/1 Running 1 62m

kube-controller-manager-k01 1/1 Running 1 62m

kube-proxy-jwcl6 1/1 Running 1 63m

kube-scheduler-k01 1/1 Running 1 63m

weave-net-sqr66 2/2 Running 1 19m

WORKER

Join k02, k03, and k04 to the cluster

# kubeadm join 192.168.52.151:6443 --token cgddkk.1b171mshb849stmd \

> --discovery-token-ca-cert-hash sha256:dfcb0500f0ea572fae555120f0e1eed0632c2b0b310bc22778098aa82c7c00fb

The original token expires after 24 hours; once it has, generate a new join command on MASTER

# kubeadm token create --print-join-command

kubeadm join 192.168.52.151:6443 --token 4e1xjd.vlm4jea79bnxoesg --discovery-token-ca-cert-hash sha256:dfcb0500f0ea572fae555120f0e1eed0632c2b0b310bc22778098aa82c7c00fb
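Note that the token changes but the `sha256:` value does not: it is a hash of the cluster CA's public key, not of the token. If only the hash is needed, it can be recomputed on the master with openssl. The sketch below follows the pipeline given in the kubeadm documentation and assumes an RSA CA key at the default kubeadm path; the function name `ca_cert_hash` is made up.

```shell
# Print the sha256 hash of the public key in a CA certificate, in the form
# expected after "sha256:" in --discovery-token-ca-cert-hash.
# Assumes an RSA key. $1: CA certificate path (defaults to the kubeadm location).
ca_cert_hash() {
  ca_cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca_cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Running `echo "sha256:$(ca_cert_hash)"` on the master should reproduce the value printed by `kubeadm token create --print-join-command`.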

MASTER

List the cluster nodes

# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k01 Ready master 26m v1.16.0 192.168.52.151 <none> Ubuntu 19.04 5.0.0-27-generic docker://18.9.7

k02 Ready <none> 5m41s v1.16.0 192.168.52.152 <none> Ubuntu 19.04 5.0.0-27-generic docker://18.9.7

k03 Ready <none> 4m28s v1.16.0 192.168.52.153 <none> Ubuntu 19.04 5.0.0-27-generic docker://18.9.7

k04 Ready <none> 100s v1.16.0 192.168.52.154 <none> Ubuntu 19.04 5.0.0-27-generic docker://18.9.7
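After joining several workers, a quick way to see whether any node is still coming up is to filter the node list by status. This is only a sketch: `not_ready_nodes` is a hypothetical helper, and it treats any status other than exactly `Ready` (for example `Ready,SchedulingDisabled`) as not ready.

```shell
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

With the listing above, `kubectl get nodes | not_ready_nodes` prints nothing; before the network add-on was installed it would have printed `k01`.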

Check the running services (Pods)

# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-58cc8c89f4-9k82j 1/1 Running 0 25m 10.32.0.3 k01 <none> <none>

kube-system coredns-58cc8c89f4-tnxr6 1/1 Running 0 25m 10.32.0.2 k01 <none> <none>

kube-system etcd-k01 1/1 Running 0 24m 192.168.52.151 k01 <none> <none>

kube-system kube-apiserver-k01 1/1 Running 0 24m 192.168.52.151 k01 <none> <none>

kube-system kube-controller-manager-k01 1/1 Running 0 24m 192.168.52.151 k01 <none> <none>

kube-system kube-proxy-4rhwd 1/1 Running 0 3m51s 192.168.52.153 k03 <none> <none>

kube-system kube-proxy-mr8zd 1/1 Running 0 5m4s 192.168.52.152 k02 <none> <none>

kube-system kube-proxy-qxt28 1/1 Running 0 63s 192.168.52.154 k04 <none> <none>

kube-system kube-proxy-x9knb 1/1 Running 0 25m 192.168.52.151 k01 <none> <none>

kube-system kube-scheduler-k01 1/1 Running 0 24m 192.168.52.151 k01 <none> <none>

kube-system weave-net-28d8x 2/2 Running 0 7m6s 192.168.52.151 k01 <none> <none>

kube-system weave-net-5tzzg 2/2 Running 1 3m51s 192.168.52.153 k03 <none> <none>

kube-system weave-net-9fb8g 2/2 Running 0 63s 192.168.52.154 k04 <none> <none>

kube-system weave-net-mlnrg 2/2 Running 0 5m4s 192.168.52.152 k02 <none> <none>

Dashboard

https://github.com/kubernetes/dashboard#kubernetes-dashboard

# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

Replace the image source

# vim kubernetes-dashboard.yaml

...

image: registry.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1

...

Create

# kubectl apply -f kubernetes-dashboard.yaml

# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

...

kubernetes-dashboard-5dc4c54b55-fsns7 1/1 Running 0 21s 10.44.0.2 k02 <none> <none>

...

*** Force-delete a stuck Pod

# kubectl delete pods <pod> --grace-period=0 --force

Allow external access

# kubectl proxy --address='0.0.0.0' --accept-hosts='^*$'

Configure login permissions (RBAC)

Additional notes

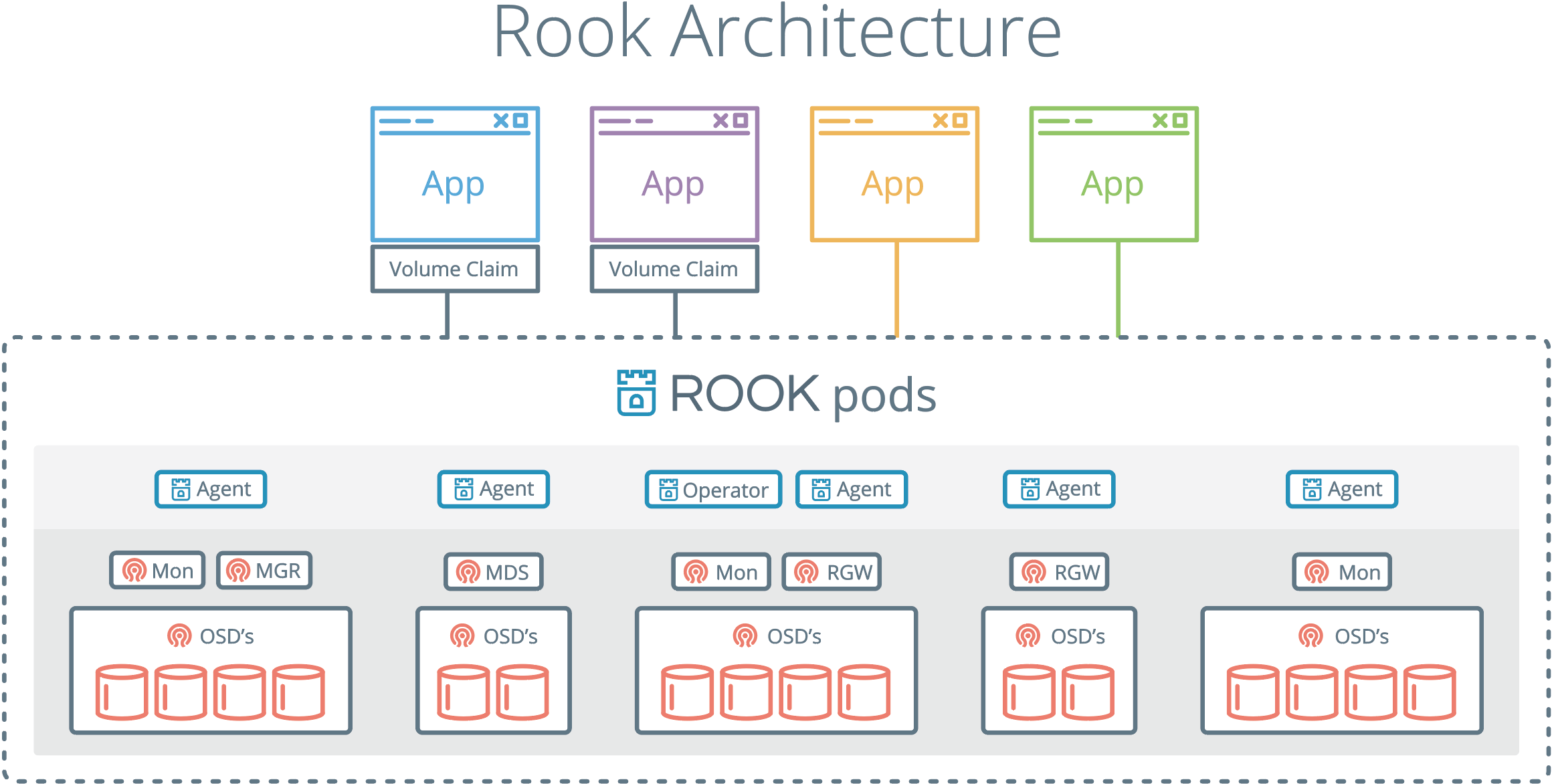

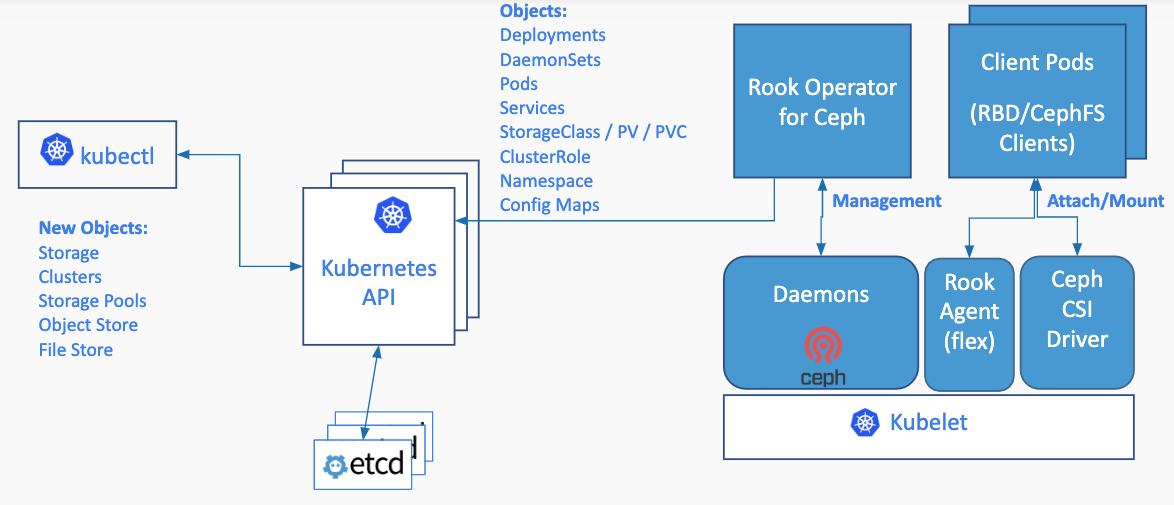
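The RBAC login step is not spelled out in this post. One common approach, assumed here rather than taken from the original setup, is to create a service account, bind it to the built-in `cluster-admin` role, and log in to the Dashboard with that account's token. The account name `admin-user` and the function name are hypothetical, and `cluster-admin` grants full access, so this only suits a test cluster like this one.

```shell
# Create a service account and bind it to the built-in cluster-admin role.
# $1: account name (hypothetical default: admin-user)
# $2: namespace (defaults to kube-system, where this Dashboard version runs)
create_dashboard_admin() {
  account="${1:-admin-user}"
  namespace="${2:-kube-system}"
  kubectl create serviceaccount "$account" -n "$namespace"
  kubectl create clusterrolebinding "$account" \
    --clusterrole=cluster-admin \
    --serviceaccount="${namespace}:${account}"
}
```

After `create_dashboard_admin`, the login token on a 1.16 cluster should be visible with `kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | awk '/admin-user-token/ {print $1}')`.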

Rook

Use Ceph, wrapped by Rook, as the storage backend to get self-managing, self-scaling, and self-healing storage

How Ceph, Rook, and K8S integrate

Download common.yaml, operator.yaml, and cluster-test.yaml from GitHub

https://github.com/rook/rook/tree/release-1.1/cluster/examples/kubernetes/ceph
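The manifests can also be fetched from the command line. The helper below just maps a file name to its raw download URL for the `release-1.1` branch linked above; `rook_manifest_url` is a made-up name, and the URL pattern is the standard raw.githubusercontent.com form for that repository path.

```shell
# Print the raw.githubusercontent.com URL of one Rook example manifest.
# $1: file name, $2: branch (defaults to release-1.1).
rook_manifest_url() {
  file="$1"
  branch="${2:-release-1.1}"
  printf 'https://raw.githubusercontent.com/rook/rook/%s/cluster/examples/kubernetes/ceph/%s\n' "$branch" "$file"
}
```

A loop such as `for f in common.yaml operator.yaml cluster-test.yaml toolbox.yaml; do wget "$(rook_manifest_url "$f")"; done` would then download everything used in this section, including the `toolbox.yaml` needed for the Rook Toolbox step.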

Start the Rook operator

# kubectl create -f common.yaml

# kubectl create -f operator.yaml

Check

# kubectl get pods -n rook-ceph -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

rook-ceph-operator-75d95cb868-zchzf 1/1 Running 0 114s 10.42.0.1 k04 <none> <none>

rook-discover-4nd8c 1/1 Running 0 70s 10.36.0.1 k03 <none> <none>

rook-discover-f54mr 1/1 Running 0 70s 10.44.0.1 k02 <none> <none>

rook-discover-m2ww5 1/1 Running 0 70s 10.42.0.2 k04 <none> <none>

Create the Rook Ceph cluster

# kubectl create -f cluster-test.yaml

# kubectl get pods -n rook-ceph -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

csi-cephfsplugin-94p76 3/3 Running 0 7m21s 192.168.52.153 k03 <none> <none>

csi-cephfsplugin-provisioner-7c44c4ff49-8cslj 4/4 Running 1 7m21s 10.44.0.3 k02 <none> <none>

csi-cephfsplugin-provisioner-7c44c4ff49-lvt72 4/4 Running 0 7m21s 10.36.0.2 k03 <none> <none>

csi-cephfsplugin-sb62l 3/3 Running 0 7m21s 192.168.52.152 k02 <none> <none>

csi-cephfsplugin-vm8ls 3/3 Running 0 7m21s 192.168.52.154 k04 <none> <none>

csi-rbdplugin-kvmhr 3/3 Running 0 7m21s 192.168.52.154 k04 <none> <none>

csi-rbdplugin-provisioner-7458d98547-4jkr4 5/5 Running 1 7m21s 10.42.0.3 k04 <none> <none>

csi-rbdplugin-provisioner-7458d98547-9jzdx 5/5 Running 0 7m21s 10.44.0.2 k02 <none> <none>

csi-rbdplugin-q2wrj 3/3 Running 0 7m21s 192.168.52.153 k03 <none> <none>

csi-rbdplugin-wbbx9 3/3 Running 0 7m21s 192.168.52.152 k02 <none> <none>

rook-ceph-mgr-a-6f5564fc9-wz5pp 1/1 Running 0 98s 10.44.0.5 k02 <none> <none>

rook-ceph-mon-a-5ddbf74d9b-lrl7p 1/1 Running 0 4m40s 10.36.0.3 k03 <none> <none>

rook-ceph-mon-b-f49477974-rxpvr 1/1 Running 0 4m25s 10.42.0.4 k04 <none> <none>

rook-ceph-mon-c-7b94888cc7-zx4lq 1/1 Running 0 3m37s 10.44.0.4 k02 <none> <none>

rook-ceph-operator-75d95cb868-zchzf 1/1 Running 0 25m 10.42.0.1 k04 <none> <none>

rook-ceph-osd-0-74479647f9-8s9tl 1/1 Running 0 60s 10.42.0.5 k04 <none> <none>

rook-ceph-osd-1-7fc649f866-d8f5g 1/1 Running 0 58s 10.36.0.4 k03 <none> <none>

rook-ceph-osd-2-6fbd987897-8qvkh 1/1 Running 0 56s 10.44.0.6 k02 <none> <none>

rook-ceph-osd-prepare-k02-p2kz2 0/1 Completed 0 66s 10.44.0.6 k02 <none> <none>

rook-ceph-osd-prepare-k03-ntxhx 0/1 Completed 0 66s 10.36.0.4 k03 <none> <none>

rook-ceph-osd-prepare-k04-bz4rp 0/1 Completed 0 66s 10.42.0.5 k04 <none> <none>

rook-discover-4nd8c 1/1 Running 0 24m 10.36.0.1 k03 <none> <none>

rook-discover-f54mr 1/1 Running 0 24m 10.44.0.1 k02 <none> <none>

rook-discover-m2ww5 1/1 Running 0 24m 10.42.0.2 k04 <none> <none>

Management tool: Rook Toolbox

# kubectl create -f toolbox.yaml

# kubectl get pods -n rook-ceph -l "app=rook-ceph-tools"

NAME READY STATUS RESTARTS AGE

rook-ceph-tools-775bb64d55-x4skv 1/1 Running 0 14s

Check the Ceph cluster status

# kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') bash

# ceph status

cluster:

id: 5a1db4bc-94e1-4ba2-b96f-eeadeef1f28f

health: HEALTH_WARN

clock skew detected on mon.c

services:

mon: 3 daemons, quorum a,b,c (age 4m)

mgr: a(active, since 3m)

osd: 3 osds: 3 up (since 3m), 3 in (since 3m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 37 GiB used, 81 GiB / 117 GiB avail

pgs:

# ceph osd status

+----+------+-------+-------+--------+---------+--------+---------+-----------+

| id | host | used | avail | wr ops | wr data | rd ops | rd data | state |

+----+------+-------+-------+--------+---------+--------+---------+-----------+

| 0 | k04 | 12.2G | 26.9G | 0 | 0 | 0 | 0 | exists,up |

| 1 | k03 | 12.2G | 26.8G | 0 | 0 | 0 | 0 | exists,up |

| 2 | k02 | 12.1G | 26.9G | 0 | 0 | 0 | 0 | exists,up |

+----+------+-------+-------+--------+---------+--------+---------+-----------+

# ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 117 GiB 81 GiB 37 GiB 37 GiB 31.21

TOTAL 117 GiB 81 GiB 37 GiB 37 GiB 31.21

POOLS:

POOL ID STORED OBJECTS USED %USED MAX AVAIL

# rados df

POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

total_objects 0

total_used 37 GiB

total_avail 81 GiB

total_space 117 GiB
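The `ceph status` output above reports `HEALTH_WARN` with a clock skew on `mon.c`. Ceph monitors are sensitive to clock differences between nodes, and the one-off `ntpdate` run in the NTP step does not keep clocks in sync afterwards, so drift between nodes is a plausible (though unconfirmed) cause. For scripted checks, the health value can be pulled out of the status text; `ceph_health_from_status` is a hypothetical helper for that.

```shell
# Read `ceph status` text on stdin and print the value of its "health:" line,
# for example HEALTH_OK or HEALTH_WARN.
ceph_health_from_status() {
  awk '$1 == "health:" { print $2; exit }'
}
```

Inside the toolbox Pod, `ceph status | ceph_health_from_status` prints the current state, and `ceph health detail` gives the reasons behind a warning.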